Data-Driven, Goal-Oriented: Collecting Student Feedback that Matters

This has been the year of feedback at GOA. Among our faculty, we’ve focused on building a culture of feedback and a pedagogy of transparency. As a result, faculty are laser-focused on improving and enriching the feedback they give to students in the name of more powerful learning.

But what about the feedback we get from our students?

How might we gain new insights from our students? What is it that we truly need to know so we can act? Recently, I led a project to reimagine GOA’s twice-a-semester student surveys, which haven’t changed much since we launched in 2011. As the NAIS 2018 Winter Magazine highlighted, many schools are having conversations about data and how it can better help them understand their students. In light of this collective interest in learning more about students’ experiences through data collection, let me share our process in reimagining our student surveys, including five strategies for making the process and results meaningful for you and your school.

Know the goals.

If you’re interested in learning something new and useful, it’s important to articulate what might be “new” and “useful” so you can design inquiry for the insights that enable action. Key questions: What will this data enable you to do? To decide? To change?

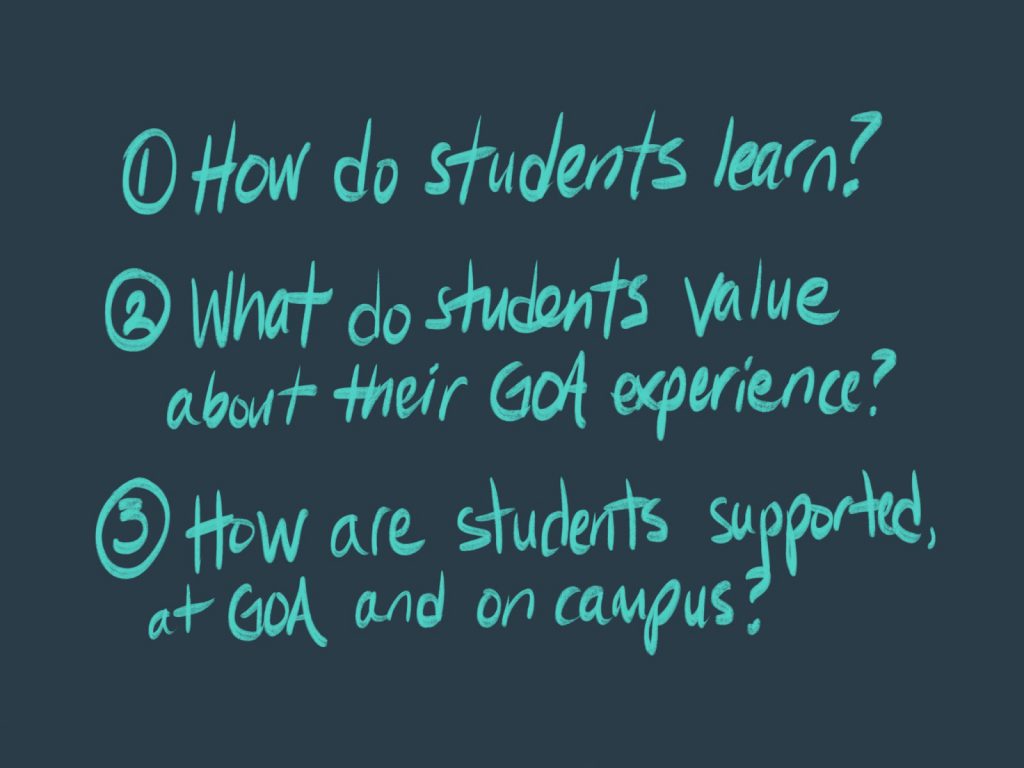

At GOA, we discussed a few approaches to what we dubbed our “Student Feedback Methodology Revision Project” (catchy, I know). Ultimately, we committed to a design thinking process. To begin, we inventoried our student feedback data and sources. From there, we discussed current and potential uses for student feedback. In our case, based on early field research and interviews, we planned to shift from student feedback surveys used primarily to serve the teacher to surveys designed to illuminate new trends about the GOA experience beyond and across courses. Informed by conversations with teachers, school Site Directors, and instructional designers, we distilled our themes to:

We decided that every line of inquiry in our revised approach should be able to map back to one or more of these central questions.
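As a minimal sketch of that mapping rule, the check below flags any survey item that does not trace back to at least one central question. The question labels and item names are placeholders invented for illustration, not GOA's actual themes or survey items.

```python
# Placeholder labels only; these are not GOA's actual central questions.
CENTRAL_QUESTIONS = {"question_a", "question_b", "question_c"}

# Hypothetical survey items, each tagged with the central questions it serves.
survey_items = {
    "item_1": {"question_a"},
    "item_2": {"question_b", "question_c"},
    "item_3": set(),            # tagged with nothing
    "item_4": {"question_d"},   # tagged with a label that is not a central question
}


def unmapped_items(items, central):
    """Return the names of items that map to none of the central questions."""
    return sorted(name for name, tags in items.items() if not (tags & central))


flagged = unmapped_items(survey_items, CENTRAL_QUESTIONS)
print(flagged)
```

Items that show up in the flagged list are candidates to cut or rewrite before the survey goes out.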

Leverage your network.

Don’t reinvent the wheel. There are schools and organizations already doing this work and often ready to share what they’ve learned. I certainly found this to be the case when I spoke with The American School in Japan, Punahou School, and Mount Vernon Presbyterian School, interviewing them on their process, priorities, and pitfalls.

Our interviews led us to seek experts in the field of survey methodology, like Panorama Ed in partnership with Harvard Graduate School of Education, and specifically organizations that could offer us research-validated survey questions. For GOA, using questions that have been tested on large groups of students and shown to measure what we believed we were asking gave us critical peace of mind that our data was sound. In the end, GOA decided to use a selection of questions from Panorama’s free student survey tool to inform our question scales. We plan to rotate questions over time as needed, giving us flexibility without sacrificing the integrity of longitudinal comparisons as we grow.

Pilot.

When you do settle on your questions, don’t skip the opportunity to get student insights on their clarity and purpose. We simply ended our surveys with the question, “Did any of these survey questions confuse you? If so, which ones and how?” Not only did those responses help us fine-tune our questions, but they also gave us tremendous confidence that we were measuring what we intended.

In addition to gathering student feedback on the survey, we piloted the usefulness of the data with our faculty and instructional design team. What feedback are teachers (and instructional coaches) finding most useful, most actionable? It was critical not to lose the feedback teachers wanted most from their students, and some early teacher feedback suggested we were doing just that. In response, we encouraged teachers to create more frequent, habitual opportunities within their courses for feedback on some of the behavioral insights they were missing. As an instructional design team, we are also now testing the question banks feature in Canvas with teachers as a tool to support this related information gathering at the organizational level.

Analyze.

Once you’ve collected student feedback, it’s time to analyze and figure out next steps. At this stage, it’s worth considering what metrics matter most to your department, division, or school before diving into the analysis.

At GOA, we approach student feedback analysis on two levels. The first is: what does this student data mean for us as an organization? How does this intel impact how we support students and schools on the whole? How does this student feedback inform our professional learning offerings? Even more broadly, what is our student body teaching us about modern learning that we can apply and share beyond GOA?

The second level is: what does this student feedback mean for that course’s design and the teacher? Here, it’s important to set goals that are lean and clean. In some cases, teachers and their instructional designers will set goals to move a specific data point by the end of the next quarter (e.g., how many students report feeling a strong sense of connection to their teacher or how many students report that teacher feedback is moving their learning forward). In other cases, the student feedback reveals areas for improvement in course design that require more substantial iteration in “the off season” (aka the summer). In most cases, teachers and instructional designers identify a bit of both.
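To make “moving a specific data point” concrete, here is a small sketch of how a course team might track one survey question across two survey rounds. Everything in it is an assumption for illustration: the five-point agreement scale, the rule that the top two options count as favorable, and the made-up responses. It is not GOA's actual scoring method.

```python
# Assumed scale: the top two options of a five-point agreement scale count as favorable.
FAVORABLE = {"Agree", "Strongly agree"}


def percent_favorable(responses):
    """Return the percentage of answered (non-blank) responses that are favorable."""
    answered = [r for r in responses if r]
    if not answered:
        return None  # no data, so no percentage to report
    favorable = sum(1 for r in answered if r in FAVORABLE)
    return round(100 * favorable / len(answered), 1)


# Made-up responses to a question such as "I feel connected to my teacher."
first_survey = ["Agree", "Neutral", "Strongly agree", "Disagree", "", "Agree"]
second_survey = ["Agree", "Agree", "Strongly agree", "Neutral", "Strongly agree", "Agree"]

baseline = percent_favorable(first_survey)
follow_up = percent_favorable(second_survey)
change = round(follow_up - baseline, 1)

print(f"Baseline: {baseline}%  Follow-up: {follow_up}%  Change: {change} points")
```

Blank answers are left out of the denominator here, which is one design choice among several. Whichever rule a team picks, it should stay the same from one survey round to the next so the comparison holds.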

Don’t let perfect be the enemy of good.

This undertaking may have been a perfectionist’s nightmare. Take it from me, the now wiser fool, who at the outset was optimistic we could serve all needs with this revision of the GOA student survey. We’ve made clear progress on the survey front, but there will always be more needs than a single survey can address without causing survey fatigue, and there will always be wishlist items that will have to wait.

More importantly, the reality is that surveys are only one type of lens through which we can learn from students’ experiences. Assessing students’ work, conducting student interviews, shadowing a student, asking students to present to the community on their experience, and soliciting feedback from secondary sources like coaches, advisors, parents, and of course faculty are all methods that contribute to a richer understanding of what it’s like to be a student. It’s when we figure out how to combine and cross-compare data from an array of sources that insights and actionable feedback really begin to come into focus.

For more, see:

- All that Jazz: Using Feedback to Make Learning Visible

- Progress, not Praise: How to Design Feedback for Competency-Based Learning

- 14 Ways Technology Supports a Culture of Feedback

Global Online Academy (GOA) reimagines learning to empower students and teachers to thrive in a globally networked society. Professional learning opportunities are open to any educator. To sign up or to learn more, see our Professional Learning Opportunities for Educators or email hello@GlobalOnlineAcademy.org with the subject title “Professional Learning.” Follow us on Twitter @GOALearning. To stay up to date on GOA learning opportunities, sign up for our newsletter here.